Hadoop and Spark: A Comparison

Before looking at why Spark eventually came into existence, let us first understand Hadoop’s architecture and components.

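Google published papers describing the Google File System (2003), MapReduce (2004), and Bigtable (2006). Apache Hadoop grew out of open-source implementations of the first two, HDFS and Hadoop MapReduce, while Bigtable inspired HBase in the wider Hadoop ecosystem. Together, these projects brought the power of distributed, parallel computing to clusters of commodity machines.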

Hadoop Storage: HDFS

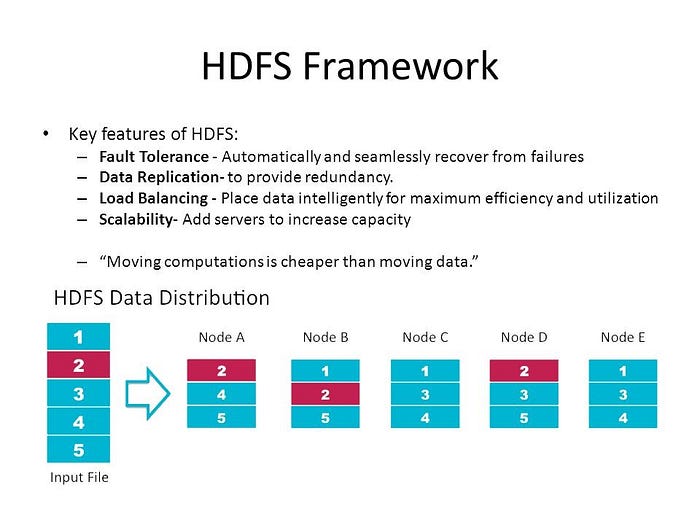

The Hadoop Distributed File System (HDFS) is an open-source counterpart of GFS and the foundation of the Hadoop ecosystem. It was designed to store very large amounts of data on an affordable, fault-tolerant, and secure file system running on commodity hardware.

HDFS Key Design Principles

Tolerance to Failure: Because HDFS is designed to run on commodity hardware, nodes in the cluster will fail or become unavailable at some point. Hadoop’s solution is replication. HDFS stores each file as a sequence of fixed-size blocks (128 MB by default since Hadoop 2), and every block is replicated across the cluster (three copies by default). Replicas are placed in a rack-aware manner, so that losing a single node, or even a whole rack, does not lose data.
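The block splitting and replica placement described above can be modeled in a few lines of Python. This is a simplified sketch, not HDFS code. The two-rack `CLUSTER` layout, the node names, and the helper functions are hypothetical. It assumes the default 128 MB block size and three replicas. Placement loosely follows HDFS's default rack-aware idea: the first replica goes on the writer's node and the other two go on two different nodes of one remote rack.

```python
import math

BLOCK_SIZE_MB = 128  # HDFS default block size since Hadoop 2

# Hypothetical two-rack cluster: rack name -> DataNodes on that rack.
CLUSTER = {
    "rack1": ["node1", "node2", "node3"],
    "rack2": ["node4", "node5", "node6"],
}

def rack_of(node):
    """Return the rack a node belongs to."""
    return next(rack for rack, nodes in CLUSTER.items() if node in nodes)

def split_into_blocks(file_size_mb):
    """Return the block sizes for a file; only the last block may be smaller."""
    n_blocks = math.ceil(file_size_mb / BLOCK_SIZE_MB)
    return [min(BLOCK_SIZE_MB, file_size_mb - i * BLOCK_SIZE_MB) for i in range(n_blocks)]

def place_replicas(writer, block_index):
    """Three replicas: one on the writer's node, two on different nodes of one remote rack."""
    remote_racks = [rack for rack in CLUSTER if rack != rack_of(writer)]
    remote_nodes = CLUSTER[remote_racks[block_index % len(remote_racks)]]
    # Deterministic rotation stands in for HDFS's randomized choice of remote nodes.
    first = block_index % len(remote_nodes)
    second = (first + 1) % len(remote_nodes)
    return [writer, remote_nodes[first], remote_nodes[second]]

def plan_file(file_size_mb, writer):
    """Pair every block of the file with the nodes that would hold its replicas."""
    return [(size, place_replicas(writer, i)) for i, size in enumerate(split_into_blocks(file_size_mb))]

for size, replicas in plan_file(300, writer="node2"):
    print(f"{size} MB block -> {replicas}")
```

Take a 300 MB file written from `node2`. The sketch produces two 128 MB blocks and one 44 MB block. Each block is stored on `node2` plus two nodes of `rack2`, so losing either rack still leaves at least one copy of every block.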

Security: HDFS offers granular access control through POSIX-style file permissions and Access Control Lists (ACLs). It also supports Kerberos for strong authentication. For authorization checks, it can look up users’ group memberships in an LDAP directory.
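The basic owner/group/other rule behind HDFS file permissions can be illustrated with a short, hypothetical Python model. The `FileStatus` class and `can_access` function are illustrative only. ACL entries, which add named users and groups on top of these three classes, are deliberately left out, as is the HDFS superuser. The rule is as follows. If the user owns the file, the owner bits are checked. Otherwise, if the user belongs to the file's group, the group bits are checked. Otherwise, the "other" bits apply.

```python
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1  # permission bits, as in POSIX

@dataclass
class FileStatus:  # hypothetical stand-in for a file's metadata
    owner: str
    group: str
    mode: int      # e.g. 0o640 = owner rw-, group r--, other ---

def can_access(status, user, user_groups, requested):
    """Select exactly one class (owner, group, or other) and test only its bits."""
    if user == status.owner:
        bits = (status.mode >> 6) & 0o7
    elif status.group in user_groups:
        bits = (status.mode >> 3) & 0o7
    else:
        bits = status.mode & 0o7
    return (bits & requested) == requested

report = FileStatus(owner="alice", group="analysts", mode=0o640)
print(can_access(report, "alice", {"staff"}, READ | WRITE))  # True: owner has rw-
print(can_access(report, "bob", {"analysts"}, WRITE))        # False: group has only r--
print(can_access(report, "carol", {"staff"}, READ))          # False: other has ---
```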

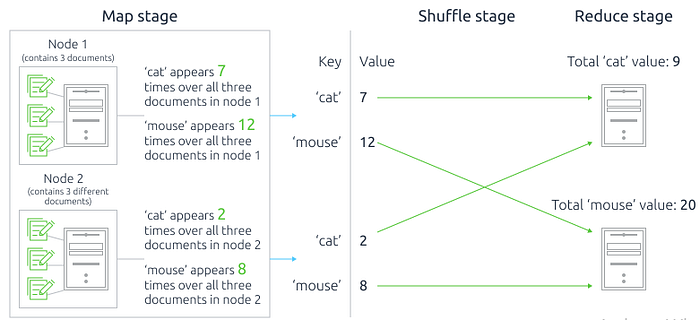

Hadoop Processing: MapReduce

Hadoop was built around the concept of batch processing. A MapReduce job runs in stages:

- Map tasks process the input in parallel, where possible on the nodes (machines) that store it.
- The intermediate key/value pairs they emit are shuffled and grouped by key.
- Reduce tasks combine each group into the final output.

This works well for large datasets, where the work can be spread across many nodes. For example, assume we have two nodes, each storing three different documents. We want to find out how many times (if at all) the words ‘cat’ and ‘mouse’ appear across all six documents. Each mapper emits a (word, 1) pair for every occurrence of ‘cat’ or ‘mouse’ in its local documents. The shuffle brings all pairs for the same word together, and the reducer sums them to give one total per word.
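The ‘cat’ and ‘mouse’ example can be traced with a small, single-process Python simulation of the three phases. The document contents and the `node1`/`node2` names are made up for illustration. In a real Hadoop job, the map tasks would run on the nodes holding the data and the framework would perform the shuffle itself.

```python
from collections import defaultdict

TARGET_WORDS = {"cat", "mouse"}

# Hypothetical cluster: two nodes, each storing three different documents.
NODES = {
    "node1": ["the cat sat", "a mouse ran", "cat and mouse"],
    "node2": ["no animals here", "the cat slept", "mouse mouse"],
}

def map_phase(document):
    """Mapper: emit (word, 1) for every occurrence of a target word."""
    return [(word, 1) for word in document.split() if word in TARGET_WORDS]

def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for word, count in pairs:
        grouped[word].append(count)
    return grouped

def reduce_phase(grouped):
    """Reducer: sum the counts for each word."""
    return {word: sum(counts) for word, counts in grouped.items()}

# Each document is mapped independently (on its own node in a real cluster).
mapped = [pair for docs in NODES.values() for doc in docs for pair in map_phase(doc)]
print(reduce_phase(shuffle_phase(mapped)))  # {'cat': 3, 'mouse': 4}
```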

The Limitations of MapReduce

Non-Iterative Execution: MapReduce does not natively support iterative processing. Iteration requires a cyclic data flow, in which the output of one step becomes the input of the next. The only options are to write a better single MapReduce job, or to chain jobs: save each run’s results back into HDFS and read them in again on the next run.
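To see why chaining jobs is costly, here is a hedged Python sketch of an iterative algorithm run the MapReduce way: a few rounds of PageRank on a made-up three-page graph. A local temporary directory stands in for HDFS. The graph, `DAMPING`, and the function names are illustrative assumptions. The point is that every iteration is a separate pass that reloads its input from storage and writes its output back before the next pass can start.

```python
import json
import os
import tempfile

# Hypothetical link graph: page -> pages it links to (every page has out-links).
GRAPH = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
DAMPING = 0.85

def pagerank_job(ranks):
    """One MapReduce-style pass of PageRank."""
    # Map: each page sends an equal share of its rank to every page it links to.
    emitted = [
        (target, ranks[page] / len(links))
        for page, links in GRAPH.items()
        for target in links
    ]
    # Reduce: sum the shares each page received and apply the damping factor.
    totals = {page: 0.0 for page in GRAPH}
    for target, share in emitted:
        totals[target] += share
    n = len(GRAPH)
    return {page: (1 - DAMPING) / n + DAMPING * total for page, total in totals.items()}

def run_chained_jobs(iterations, workdir):
    """Each iteration is a separate 'job' that reads its input from disk and writes its output back."""
    path = os.path.join(workdir, "iter-0.json")
    with open(path, "w") as f:
        json.dump({page: 1 / len(GRAPH) for page in GRAPH}, f)
    for i in range(1, iterations + 1):
        with open(path) as f:       # the next job must reload the previous output
            ranks = json.load(f)
        ranks = pagerank_job(ranks)
        path = os.path.join(workdir, f"iter-{i}.json")
        with open(path, "w") as f:  # ...and persist its own output before continuing
            json.dump(ranks, f)
    return ranks

with tempfile.TemporaryDirectory() as workdir:
    final = run_chained_jobs(20, workdir)
    print(len(os.listdir(workdir)), "files written")  # 21: initial input + one per iteration
    print({page: round(rank, 3) for page, rank in final.items()})
```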

Persistent Storage rather than RAM: In a MapReduce job, intermediate results are written to and read back from disk rather than kept in faster RAM. This makes jobs time-consuming and is another reason MapReduce is unsuitable for real-time processing.

Complex Programming: MapReduce jobs are written primarily in Java, the native language of Hadoop. Writing mapper and reducer classes in Java is specialized, low-level, and time-consuming work, even for conceptually simple tasks.

Difficult to Implement Popular Query Types: The rigid key/value model of MapReduce makes common SQL-style operations, such as joins, awkward to express. They can be implemented in Java, but doing so is a non-trivial task.
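A common way to express a join in MapReduce is the reduce-side join. Mappers tag each record with the dataset it came from, the shuffle brings records with the same key together, and the reducer pairs them up. The sketch below shows that pattern in plain Python. The `users` and `orders` records and the function names are hypothetical. A real Hadoop job would need the same logic written as Java mapper and reducer classes.

```python
from collections import defaultdict
from itertools import product

# Hypothetical input datasets keyed by user_id.
users = [(1, "alice"), (2, "bob")]
orders = [(1, "book"), (1, "lamp"), (3, "desk")]

def map_tagged(records, tag):
    """Mapper: emit (join_key, (source_tag, value)) so the reducer knows each record's origin."""
    return [(key, (tag, value)) for key, value in records]

def shuffle(pairs):
    """Group tagged values by join key."""
    grouped = defaultdict(list)
    for key, tagged in pairs:
        grouped[key].append(tagged)
    return grouped

def reduce_join(key, tagged_values):
    """Reducer: split values by source tag and emit every matching pair (an inner join)."""
    left = [v for tag, v in tagged_values if tag == "users"]
    right = [v for tag, v in tagged_values if tag == "orders"]
    return [(key, name, item) for name, item in product(left, right)]

pairs = map_tagged(users, "users") + map_tagged(orders, "orders")
joined = [row for key, values in sorted(shuffle(pairs).items()) for row in reduce_join(key, values)]
print(joined)  # [(1, 'alice', 'book'), (1, 'alice', 'lamp')]
```

Even this simplest inner join needs record tagging, a custom grouping step, and reducer-side bookkeeping. That is the kind of plumbing a single SQL `JOIN` clause hides.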

Real-Time Processing: MapReduce is designed to run on data that has already been loaded into HDFS, not on continuously arriving ‘streaming’ data such as live usage statistics from a large network.

The Spark Approach

Spark is a general-purpose analytics and compute engine that can cache data in RAM. A MapReduce workload on Hadoop instead writes intermediate results to disk and reads them back for each further calculation.

Spark was developed at UC Berkeley’s AMPLab starting in 2009 and open-sourced under a BSD license the following year. In 2013, the project was donated to the Apache Software Foundation for ongoing development and maintenance.

Spark is written natively in Scala and also provides APIs for Java, Python, and R. It is not limited to HDFS and can run in a range of environments, including Kubernetes. It also includes its own Standalone cluster manager, whose launch scripts use SSH to start worker processes on a plain set of machines that have no other cluster manager.

Spark can also run on Hadoop YARN. Within an application, it schedules jobs in FIFO order by default, with a FAIR scheduler available as an alternative. Before comparing Spark’s approach to that of MapReduce, let’s examine its key elements and general architecture.

Spark Core and Resilient Distributed Datasets (RDDs)

RDDs are at the heart of Apache Spark. A Resilient Distributed Dataset is an immutable, partitioned collection of records derived from the data. Applying an operation such as map or filter to an RDD does not change it. Instead, it creates a new, related RDD that carries the operation and its output.

In the Spark lexicon, such an operation is called a ‘transformation’. RDDs are also how Spark achieves fault tolerance. An RDD treats data as a collection of objects distributed across the nodes of a cluster. It also records its lineage: the chain of transformations, and the parent RDDs, from which it was derived. If a partition is lost, Spark can recompute it from this lineage instead of relying on replicated copies of the data.
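Lineage can be illustrated with a toy, single-machine Python model. The `ToyRDD` class below is hypothetical. It only borrows the names of Spark's `map`, `filter`, `cache`, and `collect`, and is not Spark's implementation or API. Each transformation returns a new object that remembers its parent and the function applied, and nothing is computed until `collect` is called. A "lost" cached partition (simulated by the hypothetical `drop_partition`) is rebuilt by replaying the lineage from the source data.

```python
import math

class ToyRDD:
    """Toy, single-machine model of an immutable, partitioned dataset that records its lineage."""

    def __init__(self, source=None, parent=None, op=None, name="source"):
        self._source = source    # list of partitions; only set on the source dataset
        self._parent = parent    # the dataset this one was derived from
        self._op = op            # function applied to each parent partition
        self._cached = None      # partition index -> data, once cache() is called
        self.name = name

    @classmethod
    def parallelize(cls, data, num_partitions):
        """Split data into contiguous partitions."""
        size = math.ceil(len(data) / num_partitions)
        return cls(source=[data[i * size:(i + 1) * size] for i in range(num_partitions)])

    # Transformations: return a new dataset and compute nothing yet.
    def map(self, f):
        return ToyRDD(parent=self, op=lambda part: [f(x) for x in part], name="map")

    def filter(self, pred):
        return ToyRDD(parent=self, op=lambda part: [x for x in part if pred(x)], name="filter")

    def cache(self):
        """Keep computed partitions in memory for reuse."""
        self._cached = {}
        return self

    def drop_partition(self, i):
        """Simulate losing a cached partition, e.g. when an executor fails."""
        if self._cached is not None:
            self._cached.pop(i, None)

    def num_partitions(self):
        return len(self._source) if self._parent is None else self._parent.num_partitions()

    def compute(self, i):
        """Return partition i from memory if cached, otherwise replay the lineage."""
        if self._cached is not None and i in self._cached:
            return self._cached[i]
        if self._parent is None:
            result = list(self._source[i])
        else:
            result = self._op(self._parent.compute(i))
        if self._cached is not None:
            self._cached[i] = result
        return result

    # Action: triggers the actual computation.
    def collect(self):
        return [x for i in range(self.num_partitions()) for x in self.compute(i)]

    def lineage(self):
        return self.name if self._parent is None else f"{self._parent.lineage()} -> {self.name}"

numbers = ToyRDD.parallelize(list(range(10)), num_partitions=3)
squares_of_evens = numbers.filter(lambda x: x % 2 == 0).map(lambda x: x * x).cache()
print(squares_of_evens.lineage())   # source -> filter -> map
print(squares_of_evens.collect())   # [0, 4, 16, 36, 64]
squares_of_evens.drop_partition(1)  # "lose" partition 1 ...
print(squares_of_evens.collect())   # ... and rebuild it from lineage: [0, 4, 16, 36, 64]
```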

Spark SQL

Spark SQL provides a native API for running structured queries over distributed data. It avoids the additional overhead and possible performance penalties of the secondary frameworks, such as Hive on MapReduce, that have grown up around MapReduce in Hadoop.

Spark SQL provides a relational layer over the RDDs generated during a session, so that distributed data can be queried like relational tables. A single Spark SQL query can combine native RDDs with data imported from external sources as necessary.

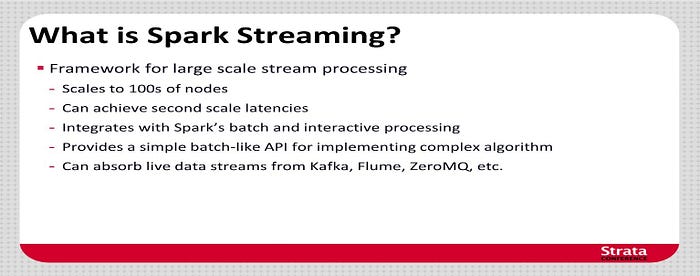

Spark Streaming

Spark Streaming allows live data to be fed into Spark’s analytical framework as it arrives. It takes incoming data from sources such as TCP sockets, Apache Kafka, and Apache Flume, among many others, and divides it into small batches. These are processed with high-level functions such as map, reduce, and window. The results can then be passed on to result sets for further batch work, written back to HDFS, or sent directly to end-user systems such as dashboards. This approach suits near-real-time predictive analytics and other machine-learning tasks that need prompt output.
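Spark Streaming's classic DStream model handles a live stream by cutting it into small batches at a fixed interval and running a batch computation on each one. The hypothetical Python sketch below imitates that idea without using Spark's API. It groups a made-up list of timestamped events into micro-batches, using an assumed two-second interval, and counts words per batch.

```python
from collections import Counter, defaultdict

BATCH_INTERVAL_S = 2.0  # hypothetical batch interval

# Hypothetical stream of (arrival_time_seconds, word) events.
events = [(0.1, "cat"), (0.7, "mouse"), (1.9, "cat"), (2.2, "cat"), (3.5, "mouse"), (4.0, "dog")]

def micro_batches(stream, interval):
    """Assign each event to the batch covering [k * interval, (k + 1) * interval)."""
    batches = defaultdict(list)
    for timestamp, word in stream:
        batches[int(timestamp // interval)].append(word)
    return dict(sorted(batches.items()))

for batch_id, words in micro_batches(events, BATCH_INTERVAL_S).items():
    # Each batch is processed as one small batch job, so results are emitted
    # once per interval, after the batch closes, rather than per event.
    print(batch_id, dict(Counter(words)))
# 0 {'cat': 2, 'mouse': 1}
# 1 {'cat': 1, 'mouse': 1}
# 2 {'dog': 1}
```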

The Limitations of Spark

Fast, But Not Real-Time: Spark Streaming processes incoming data in micro-batches, so it does not handle each event in true real time. Genuinely real-time solutions can be built with Apache Storm, or with Apache Kafka combined with HBase. Other combinations from the Apache data streaming portfolio, as well as third-party offerings, are also possible.

No Native File System: Spark has no storage layer of its own. Because Hadoop has captured such a large share of the market, Spark still runs most frequently on top of some flavor of Hadoop and HDFS.

Requires HDFS (or Similar) for Best Security Practices: Spark’s own security is sparse, as its built-in authentication relies on a shared secret (essentially password authentication). Running Spark on HDFS lets it benefit from HDFS ACLs and file-level permissions, and running it on YARN lets it use Kerberos authentication.

Does Not Always Scale Well: High concurrency and heavy compute loads can cause Spark to perform poorly. It can also slow down under more demanding workloads and fail with out-of-memory (OOM) errors.

Does Not Handle Small Files Efficiently: Popular cloud storage solutions are optimized for large files rather than the many small files that a typical big data batch job can produce. Spark has no built-in solution to this small-file problem. It depends on layers built on top of it, such as Delta Lake, Apache CarbonData, or Apache Hudi.
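One technique such layers use is compaction: rewriting many small files into fewer, larger ones. The core of it is a bin-packing problem. The Python sketch below uses first-fit decreasing with hypothetical file names, sizes, and a 128 MB target. It illustrates the idea only and is not the algorithm or API of Delta Lake, CarbonData, or Hudi.

```python
TARGET_MB = 128  # hypothetical target size for compacted files

def plan_compaction(files, target_mb=TARGET_MB):
    """First-fit decreasing: place each file (largest first) into the first group with room."""
    groups = []  # each group: [total_size, [file names]]
    for name, size in sorted(files.items(), key=lambda item: item[1], reverse=True):
        for group in groups:
            if group[0] + size <= target_mb:
                group[0] += size
                group[1].append(name)
                break
        else:
            # No existing group has room (a file larger than the target gets its own group).
            groups.append([size, [name]])
    return [(total, names) for total, names in groups]

# Hypothetical small output files (name -> size in MB) left behind by a batch job.
small_files = {f"part-{i:05d}": size for i, size in enumerate([40, 10, 70, 25, 90, 5, 60, 30])}

for total, names in plan_compaction(small_files):
    print(f"{total:>3} MB <- {names}")
```

Here, eight small files collapse into three outputs of 125, 120, and 85 MB. The target size trades fewer files against the cost of rewriting more data in each compaction run.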
